Autoencoders have earned a significant place among artificial neural networks because of their versatility. They are used in a wide range of domains, including image and speech recognition (typically as feature learners), image denoising, anomaly detection, and data compression.

The encoder and the decoder are the two core components of an autoencoder. The encoder’s role is to compress the data by mapping each input to a lower-dimensional representation, often called the encoding, code, or latent representation (the space of all such codes is the latent space). It does this through a series of learned, typically nonlinear, transformations that aim to preserve the most informative features of the input. Think of it as a funnel in which a large amount of information is distilled into a compact form. The decoder does the reverse: it maps the compressed representation back to the input space, trying to reconstruct the original input as closely as possible. Both parts are trained together to minimize the difference between the input and its reconstruction (the reconstruction error), so no labels are needed. This pairing of compression and reconstruction is what makes autoencoders so intriguing and versatile.
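To make the data flow concrete, here is a minimal NumPy sketch of a single-layer encoder and decoder. It only illustrates the shapes involved: the weights are random rather than learned, and the sizes (`input_dim = 784`, as for a flattened 28x28 image, and `encoding_dim = 32`) as well as the helper names `encode` and `decode` are illustrative assumptions, not taken from the article.

```python
import numpy as np

rng = np.random.default_rng(0)

input_dim = 784      # assumed: a flattened 28x28 image
encoding_dim = 32    # assumed size of the latent code

# Randomly initialized weights; a real autoencoder learns these during training.
W_enc = rng.normal(scale=0.01, size=(input_dim, encoding_dim))
b_enc = np.zeros(encoding_dim)
W_dec = rng.normal(scale=0.01, size=(encoding_dim, input_dim))
b_dec = np.zeros(input_dim)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def encode(x):
    """Encoder: map inputs of shape (n, input_dim) to codes of shape (n, encoding_dim)."""
    return relu(x @ W_enc + b_enc)

def decode(z):
    """Decoder: map codes of shape (n, encoding_dim) back to shape (n, input_dim)."""
    return sigmoid(z @ W_dec + b_dec)

x = rng.random((5, input_dim))   # five fake inputs with values in [0, 1)
z = encode(x)                    # compressed representation
x_hat = decode(z)                # reconstruction, same shape as x
print(x.shape, z.shape, x_hat.shape)   # (5, 784) (5, 32) (5, 784)
```

Training would adjust `W_enc`, `b_enc`, `W_dec`, and `b_dec` so that `x_hat` moves closer to `x`.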

Now, let’s focus on the bottleneck layer that lies between the encoder and decoder and holds the encoded representation. This layer is pivotal to the autoencoder’s ability to learn a compact representation of the input. The bottleneck typically has far fewer units than the input has dimensions, so the network cannot simply copy its input to its output. This constraint forces the autoencoder to keep only the features that matter most for reconstruction and to discard less relevant information, producing an efficient representation that many applications can build on.
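The effect of the bottleneck size can be seen in a special case that needs no training: for a purely linear autoencoder with squared-error loss, the best possible reconstruction through a k-unit bottleneck is the projection onto the top k principal components of the centered data. The sketch below computes that optimum directly with an SVD, on synthetic data that is assumed to vary along only 3 hidden directions inside 20 dimensions. The function name `linear_bottleneck_mse` is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic data (assumed): 500 samples in 20 dimensions that really vary
# along only 3 hidden directions, plus a little measurement noise.
n, d, true_dim = 500, 20, 3
latent = rng.normal(size=(n, true_dim))
mixing = rng.normal(size=(true_dim, d))
X = latent @ mixing + 0.01 * rng.normal(size=(n, d))
X = X - X.mean(axis=0)  # center the data, as PCA assumes

# Rows of Vt are the principal directions, sorted by explained variance.
_, _, Vt = np.linalg.svd(X, full_matrices=False)

def linear_bottleneck_mse(X, Vt, k):
    """MSE of the optimal linear encoder/decoder with a k-unit bottleneck."""
    V_k = Vt[:k].T          # (d, k): encoder weights; V_k.T acts as the decoder
    codes = X @ V_k         # encode: (n, d) -> (n, k)
    X_hat = codes @ V_k.T   # decode: (n, k) -> (n, d)
    return float(np.mean((X - X_hat) ** 2))

errors = {k: linear_bottleneck_mse(X, Vt, k) for k in range(1, 6)}
for k, err in errors.items():
    print(f"bottleneck size {k}: reconstruction MSE = {err:.5f}")
```

The error drops sharply until the bottleneck matches the data’s 3 underlying degrees of freedom and barely changes afterwards. A nonlinear autoencoder follows the same logic but can also capture curved structure that a linear projection cannot.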

Applications of Autoencoders

In image denoising, autoencoders prove their mettle by removing noise from images while preserving their essential features. A denoising autoencoder is trained on pairs of images: the noisy version is fed in as the input, and the corresponding clean image serves as the reconstruction target, so the network learns to map corrupted images to clean ones. This capability is particularly valuable in medical imaging, where clear and accurate images are paramount for precise diagnosis. By using autoencoders for denoising, healthcare professionals can help improve the quality of medical scans, supporting more reliable diagnoses.
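A minimal Keras sketch of the training setup described above, assuming TensorFlow 2.x. The “images” are hypothetical stand-ins (1,000 flattened 8x8 arrays generated from 4 hidden factors so that there is structure to learn), and the helper `add_noise`, the Gaussian noise level, and the layer sizes are all illustrative choices rather than values from the article.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

rng = np.random.default_rng(0)

def add_noise(x, noise_std=0.2, rng=rng):
    """Hypothetical helper: add Gaussian noise, then clip back to [0, 1]."""
    noisy = x + rng.normal(scale=noise_std, size=x.shape)
    return np.clip(noisy, 0.0, 1.0).astype("float32")

# Stand-in for clean images: 1,000 flattened 8x8 "images" in [0, 1]
# generated from 4 hidden factors (synthetic, assumed).
latent = rng.normal(size=(1000, 4))
mixing = rng.normal(size=(4, 64))
x_clean = (1.0 / (1.0 + np.exp(-latent @ mixing))).astype("float32")
x_noisy = add_noise(x_clean)

input_dim, encoding_dim = 64, 16
inputs = keras.Input(shape=(input_dim,))
encoded = layers.Dense(encoding_dim, activation="relu")(inputs)
decoded = layers.Dense(input_dim, activation="sigmoid")(encoded)
denoiser = keras.Model(inputs, decoded)
denoiser.compile(optimizer="adam", loss="mse")

# The key difference from a plain autoencoder: noisy inputs, clean targets.
history = denoiser.fit(x_noisy, x_clean, epochs=5, batch_size=32, verbose=0)

# At inference time, the model receives only noisy data.
x_denoised = denoiser.predict(x_noisy[:10], verbose=0)
```

For real images, convolutional layers usually work better than the dense layers used here; the essential idea, training on (noisy, clean) pairs, stays the same.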

Another critical application is anomaly detection. The autoencoder is trained only on normal, expected data, so it learns to reconstruct that data accurately. When it encounters an anomalous or unexpected data point, it typically reconstructs it poorly, producing a noticeably higher reconstruction error. Flagging inputs whose error exceeds a chosen threshold therefore gives a signal for detecting anomalies. This is valuable in cybersecurity, where unusual patterns in network traffic can point to potential threats. Similarly, in industrial quality control, autoencoders can help identify defective products on assembly lines by recognizing anomalies in sensor data.
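The thresholding logic can be sketched independently of any particular network. So that this sketch runs without training, a rank-3 linear projection fitted to the normal data stands in for a trained autoencoder’s reconstruction step; in practice you would pass something like a trained model’s `predict` method instead. The data is synthetic, and the helper names and the 99th-percentile cutoff are assumed choices.

```python
import numpy as np

rng = np.random.default_rng(1)

def reconstruction_errors(x, reconstruct):
    """Per-sample mean squared error between inputs and their reconstructions."""
    x_hat = reconstruct(x)
    return np.mean((x - x_hat) ** 2, axis=1)

def fit_threshold(normal_errors, percentile=99.0):
    """Cutoff that flags roughly (100 - percentile)% of normal samples."""
    return np.percentile(normal_errors, percentile)

# "Normal" data (synthetic): 20 features driven by 3 hidden factors.
mixing = rng.normal(size=(3, 20))
x_normal = rng.normal(size=(1000, 3)) @ mixing + 0.05 * rng.normal(size=(1000, 20))

# Stand-in for a trained autoencoder: encode by projecting onto the top-3
# principal directions of the normal data, decode by projecting back.
mean = x_normal.mean(axis=0)
_, _, Vt = np.linalg.svd(x_normal - mean, full_matrices=False)
V = Vt[:3].T

def reconstruct(x):
    return (x - mean) @ V @ V.T + mean

threshold = fit_threshold(reconstruction_errors(x_normal, reconstruct))

# New data: 5 points that follow the normal structure, 5 that do not.
x_new_normal = rng.normal(size=(5, 3)) @ mixing + 0.05 * rng.normal(size=(5, 20))
x_anomalous = rng.normal(scale=3.0, size=(5, 20))
x_new = np.vstack([x_new_normal, x_anomalous])

is_anomaly = reconstruction_errors(x_new, reconstruct) > threshold
print(is_anomaly)
```

The percentile controls the trade-off between false alarms and missed anomalies, so it is usually tuned on held-out data.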

These examples illustrate how the ability of autoencoders to capture essential features, and to expose deviations from expected patterns, has given them a significant foothold in real-world applications. Whether it’s enhancing image quality in medical imaging or helping safeguard computer networks from cyber threats, autoencoders have proven to be a versatile and powerful tool in the realm of artificial neural networks.

Implementing Autoencoders with Keras and TensorFlow

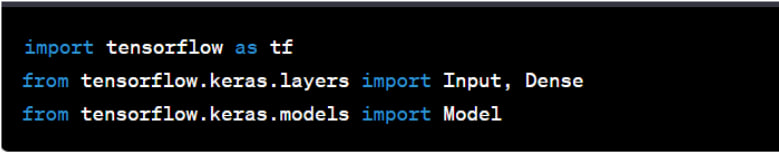

Step 1: Import Dependencies

Implementing an autoencoder begins with importing the essential libraries. This preliminary step gives you the tools needed to construct and train the model. Keras and TensorFlow are among the most widely used deep learning libraries, and in TensorFlow 2.x Keras ships as TensorFlow’s high-level API (`tf.keras`). Importing them gives you access to a large ecosystem of prebuilt layers, optimizers, and loss functions that streamline development.
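A typical set of imports for the steps that follow might look like this. It assumes TensorFlow 2.x, where Keras is available through `tensorflow.keras`; the exact imports in the original screenshot may differ.

```python
# NumPy is used to prepare input arrays.
import numpy as np

# TensorFlow provides Keras as its high-level API.
import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import layers

print("TensorFlow version:", tf.__version__)
```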

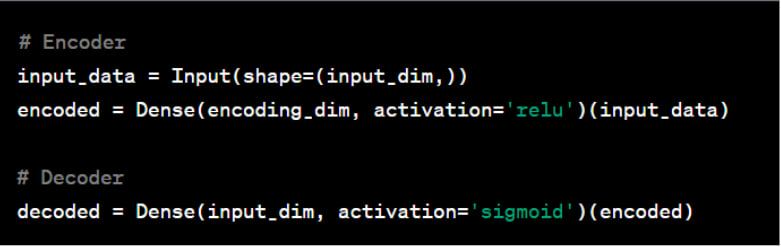

Step 2: Define the Encoder and Decoder

With the foundational libraries in place, the next critical step involves defining the encoder and decoder components of the autoencoder using Keras layers. This is where you shape the architecture of your neural network.

In this code snippet, `input_dim` signifies the dimensionality of your input data, while `encoding_dim` represents the dimensionality of the encoded representation. The encoder typically employs activation functions like ReLU (Rectified Linear Unit) to introduce non-linearity into the network, allowing it to capture complex patterns in the data.
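Here is a sketch of what such an encoder and decoder can look like with the Keras functional API, assuming TensorFlow 2.x. The sizes (784 inputs, as for flattened 28x28 images, and a 32-unit code) and the variable names `input_layer`, `encoded`, and `decoded` are illustrative assumptions, not necessarily those in the screenshot.

```python
from tensorflow import keras
from tensorflow.keras import layers

input_dim = 784     # assumed: flattened 28x28 grayscale images
encoding_dim = 32   # assumed size of the bottleneck

# Encoder: compress input_dim features into encoding_dim features.
# ReLU adds the non-linearity mentioned above.
input_layer = keras.Input(shape=(input_dim,))
encoded = layers.Dense(encoding_dim, activation="relu")(input_layer)

# Decoder: expand the code back to input_dim features.
# Sigmoid keeps outputs in [0, 1], which suits pixel values scaled to that range.
decoded = layers.Dense(input_dim, activation="sigmoid")(encoded)
```

Deeper variants stack several `Dense` layers of decreasing width in the encoder and mirror them in the decoder.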

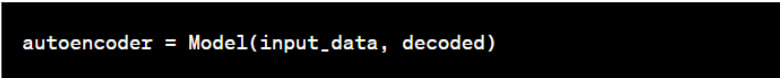

Step 3: Create the Autoencoder Model

Having defined the encoder and decoder, you combine them to form the complete autoencoder model. This model encapsulates the entire architecture and functionality of your autoencoder in a single object.

This step connects the encoder’s output to the decoder’s input, so that one model performs end-to-end compression and reconstruction: data goes in, and its reconstruction comes out.
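With the functional API, building the model amounts to naming its input and output tensors. The sketch below repeats the assumed layer definitions so that it runs on its own, and it also builds a separate `encoder` model that shares the same layers, a common pattern for extracting the compressed codes later. The names and sizes are illustrative assumptions.

```python
from tensorflow import keras
from tensorflow.keras import layers

input_dim, encoding_dim = 784, 32   # assumed sizes

input_layer = keras.Input(shape=(input_dim,))
encoded = layers.Dense(encoding_dim, activation="relu")(input_layer)
decoded = layers.Dense(input_dim, activation="sigmoid")(encoded)

# The full autoencoder maps an input to its reconstruction.
autoencoder = keras.Model(inputs=input_layer, outputs=decoded)

# A second model sharing the same layers exposes the compressed code,
# which is useful for inspecting or reusing the learned representation.
encoder = keras.Model(inputs=input_layer, outputs=encoded)

autoencoder.summary()
```

Because the two models share layers, training `autoencoder` also updates the weights that `encoder` uses.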

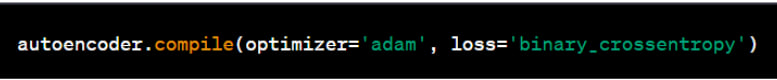

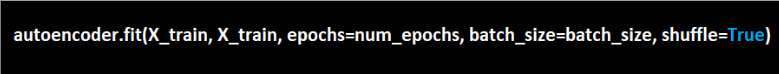

Step 4: Compile and Train

Now comes the practical part: training your autoencoder. After defining the model, you compile it with appropriate settings, chiefly the optimizer and the loss function. For reconstruction, the loss is commonly mean squared error, or binary cross-entropy when inputs are scaled to [0, 1]. The choice of optimizer can significantly affect training; `'adam'` is a common default because it adapts per-parameter learning rates and usually works well without much tuning.

Once the model is compiled, you train it on your dataset. Because the goal is to reproduce the input, the same data serves as both the input and the target. Over a specified number of epochs, the network iteratively improves both how it encodes the data and how it decodes it.

`X_train` is your training dataset, `num_epochs` sets how many full passes are made over it, and `batch_size` sets how many samples are processed per gradient update. Training adjusts the network’s weights and biases to minimize the reconstruction error between the input and the output, which pushes the bottleneck to capture the most salient features of your data.
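A self-contained sketch of compiling and training, assuming TensorFlow 2.x. `X_train` here is hypothetical random data in [0, 1) standing in for real images, and the values of `num_epochs`, `batch_size`, and `validation_split` are assumed starting points rather than recommendations from the article.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

input_dim, encoding_dim = 784, 32
num_epochs, batch_size = 5, 256   # assumed values; tune for your data

# Hypothetical training data: random values in [0, 1) standing in for images.
rng = np.random.default_rng(0)
X_train = rng.random((1024, input_dim)).astype("float32")

input_layer = keras.Input(shape=(input_dim,))
encoded = layers.Dense(encoding_dim, activation="relu")(input_layer)
decoded = layers.Dense(input_dim, activation="sigmoid")(encoded)
autoencoder = keras.Model(input_layer, decoded)

# Adam optimizer; mean squared error measures the reconstruction error.
autoencoder.compile(optimizer="adam", loss="mse")

# The input is also the target: the network learns to reproduce X_train.
history = autoencoder.fit(
    X_train, X_train,
    epochs=num_epochs,
    batch_size=batch_size,
    shuffle=True,
    validation_split=0.1,   # hold out 10% to monitor reconstruction error
    verbose=0,
)
print(history.history["loss"])
```

Random data has no structure to compress, so the loss here stays high; with real data, comparing `loss` and `val_loss` across epochs shows whether the bottleneck is learning features that generalize.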

Implementing autoencoders using Keras and TensorFlow combines theoretical knowledge with practical application. These libraries provide a robust and user-friendly framework for constructing, training, and deploying neural networks, making complex tasks like building autoencoders accessible to a wide range of developers and researchers. Armed with this practical know-how, you can confidently apply autoencoders to various domains, leveraging their capabilities to solve real-world problems and uncover hidden patterns in your data.